Humans are now closer to seeing through the eyes of animals, thanks to an innovative software framework developed by researchers from the University of Queensland and the University of Exeter.

PhD candidate Cedric van den Berg from UQ’s School of Biological Sciences said that, until now, it had been difficult to understand how animals really see the world.

“Most animals have completely different visual systems to humans, so – for many species – it is unclear how they see complex visual information or colour patterns in nature, and how this drives their behaviour,” he said.

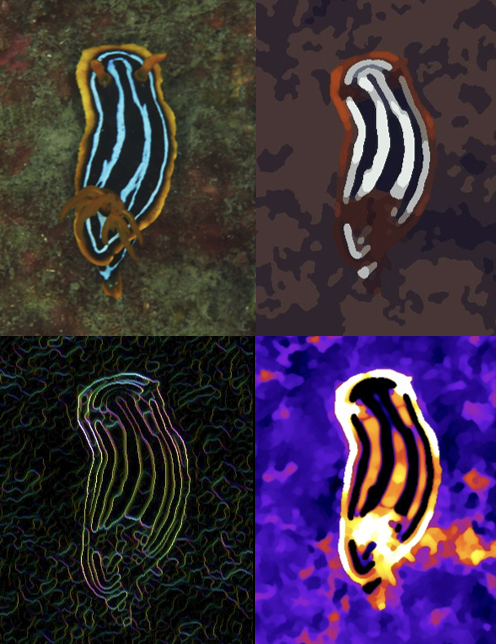

“The Quantitative Colour Pattern Analysis (QCPA) framework is a collection of innovative digital image processing techniques and analytical tools designed to solve this problem.

“Collectively, these tools greatly improve our ability to analyse complex visual information through the eyes of animals.”

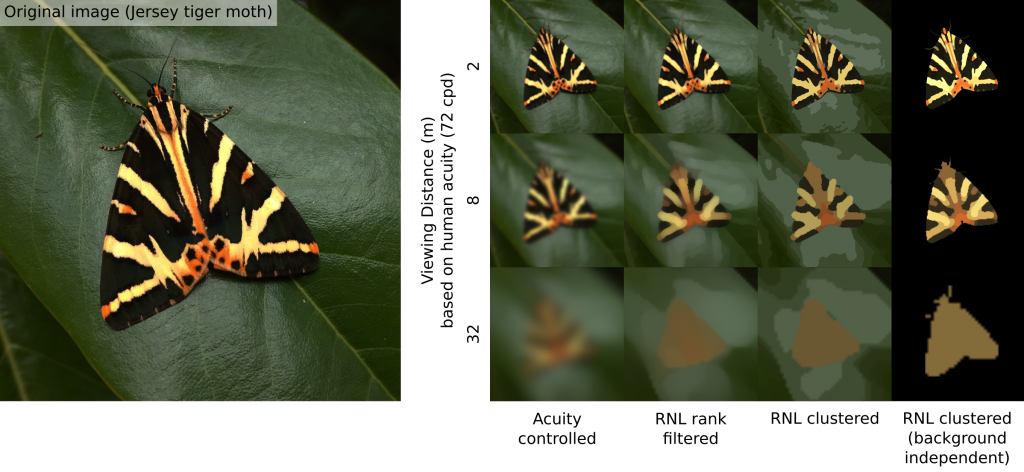

Warning colours can actually become the perfect camouflage when viewed at certain distances. This Jersey tiger moth is warning potential predators that it tastes bad, but predators can only see these warnings up close. From far away, the colours blend together to make the perfect camouflage. The high-contrast colours of many species complement each other to create this effect from a distance.

Dr Jolyon Troscianko, the study’s co-leader from the University of Exeter, said colour patterns have been key to understanding many fundamental evolutionary problems, such as how animals signal to each other or hide from predators.

“We have known for many years that understanding animal vision and signalling depends on combining colour and pattern information, but the available techniques were near impossible to implement without some key advances we developed for this framework.”

The framework’s use of digital photos means it can be used in almost any habitat – even underwater – using anything from off-the-shelf cameras to sophisticated full-spectrum imaging systems.

“You can even access most of its capabilities by using a cheap (~ $110 AUD, £60 GBP, $80 USD) smartphone to capture photos,” Dr Troscianko said.

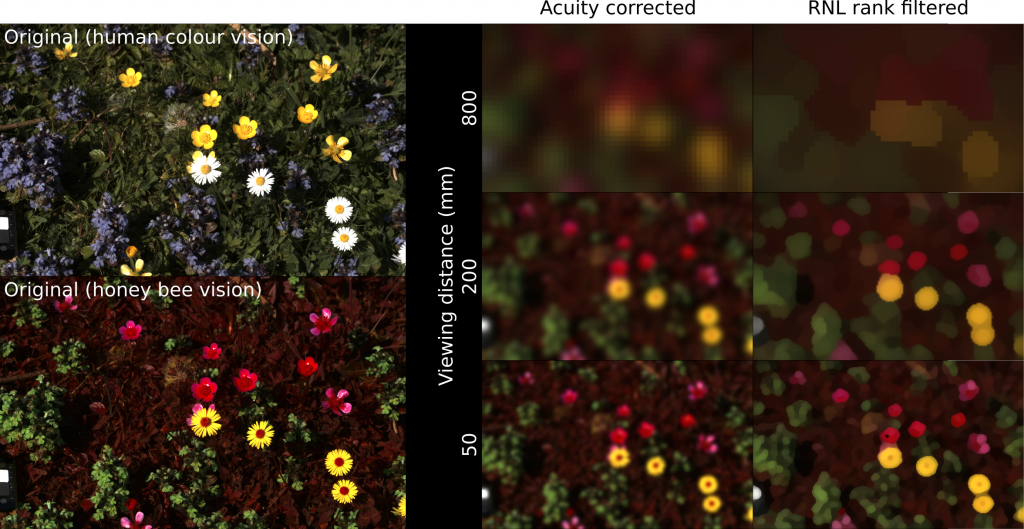

Bees not only see the world in different colours (green, blue and ultraviolet instead of red, green and blue like humans), but they also have much lower spatial acuity. This means they can’t see as much detail as we can. In fact, we can see things about 150 times smaller (or further away) than bees can. This figure shows how wildflowers appear to a bee’s colour and spatial vision from different distances. The flowers will barely be visible to a bee beyond one metre away.
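To give a rough feel for what a 150-fold difference in acuity means at different distances, here is a minimal Python sketch. It is not part of the QCPA framework and does not reproduce its image processing. The human acuity value (about 60 cycles per degree) is an assumption used for illustration, the bee value is simply derived from the "about 150 times" figure in the caption, and the function name is hypothetical.

```python
import math

# Assumption for illustration: human acuity of roughly 60 cycles per degree.
HUMAN_ACUITY_CPD = 60.0
# From the caption: humans resolve details about 150 times smaller than bees.
ACUITY_RATIO = 150.0
BEE_ACUITY_CPD = HUMAN_ACUITY_CPD / ACUITY_RATIO


def smallest_resolvable_detail_mm(acuity_cpd: float, distance_m: float) -> float:
    """Approximate width of the finest detail a viewer can resolve.

    One cycle is a light bar plus a dark bar, so the finest single detail
    spans half a cycle. The width follows from the angle it subtends.
    """
    min_angle_deg = 1.0 / (2.0 * acuity_cpd)
    half_angle_rad = math.radians(min_angle_deg) / 2.0
    return 2.0 * distance_m * 1000.0 * math.tan(half_angle_rad)


if __name__ == "__main__":
    for distance_m in (0.1, 0.5, 1.0):
        bee_mm = smallest_resolvable_detail_mm(BEE_ACUITY_CPD, distance_m)
        human_mm = smallest_resolvable_detail_mm(HUMAN_ACUITY_CPD, distance_m)
        print(f"{distance_m:.1f} m: bee ~{bee_mm:.1f} mm, human ~{human_mm:.3f} mm")
```

Under these assumed values, the finest detail a bee could resolve at one metre is on the order of a couple of centimetres, which is consistent with small flowers becoming hard to make out at that range.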

It took four years to develop and test the technology, which included the development of an extensive interactive online platform to provide researchers, teachers and students with user guides, tutorials and worked examples of how to use the tools.

UQ’s Dr Karen Cheney said the framework can be applied to a wide range of environmental conditions and visual systems.

“The flexibility of the framework allows researchers to investigate the colour patterns and natural surroundings of a wide range of organisms, such as insects, birds, fish and flowering plants,” she said.

“For example, we can now truly understand the impacts of coral bleaching for camouflaged reef creatures in a new and informative way.

“We’re helping people – wherever they are – to cross the boundaries between human and animal visual perception.

“It’s really a platform that anyone can build on, so we’re keen to see what future breakthroughs are ahead.”
